What You Need to Know About Google's BERT Update

Google uses a combination of algorithms and ranking signals to position web pages in the SERP. Unlike in its early years, Google now makes thousands of changes to its algorithms every year. The latest major algorithm update was BERT, which rolled out in the last week of October 2019. Google says it affects about 10% of all search queries, making it the biggest update since RankBrain.

What Does BERT Mean?

BERT stands for Bidirectional Encoder Representations from Transformers. Unlike other language representation models, BERT is designed to pre-train deep bidirectional representations from unlabeled text by jointly conditioning on both left and right context in all layers. In simple language, BERT helps the machine understand what the words in a sentence mean by taking the full surrounding context into account. As a result, the pre-trained model can be fine-tuned with just one additional output layer to create state-of-the-art models for a wide range of tasks, such as question answering and language inference, without substantial task-specific architecture modifications.

For example, consider the following two sentences:

Sentence 1: "What is your date of birth?"

Sentence 2: "I went out for a date with John."

The meaning of the word "date" is different in these two sentences.

A unidirectional contextual model represents each word using only the words on one side of it, typically the words that come before. It would therefore represent 'date' based on 'What is your', but not 'of birth.' BERT, by contrast, represents 'date' using both its previous and next context: 'What is your ... of birth?'
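The difference between the two kinds of context can be sketched in a few lines of Python. This is only an illustration of which words are visible to each kind of model, not how BERT is implemented; the function names `left_context` and `bidirectional_context` are made up for this example.

```python
def left_context(tokens, index):
    """Words a left-to-right (unidirectional) model can use for tokens[index]."""
    return tokens[:index]


def bidirectional_context(tokens, index):
    """Words on both sides of tokens[index], as a bidirectional model uses them."""
    return tokens[:index] + tokens[index + 1:]


sentence = "what is your date of birth".split()
position = sentence.index("date")

print(left_context(sentence, position))           # ['what', 'is', 'your']
print(bidirectional_context(sentence, position))  # ['what', 'is', 'your', 'of', 'birth']
```

Only the second list contains 'of birth', which is the part of the sentence that tells a reader 'date' means a calendar date here.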

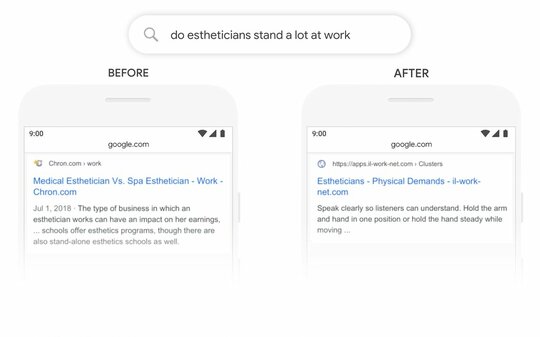

Google has shared several examples of how this update changes search results. One such example is the query "do estheticians stand a lot at work."

Google stated, "Previously, our systems were taking an approach of matching keywords, matching the term 'stand-alone' in the result with the word 'stand' in the query. But that isn't the right use of the word 'stand' in context. Our BERT models, on the other hand, understand that 'stand' is related to the concept of the physical demands of a job, and displays a more useful response."
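To see why plain keyword matching goes wrong on this query, here is a minimal sketch of naive term overlap. It is an assumption-laden toy, not Google's actual ranking code: the `keyword_overlap` function and the sample passage are hypothetical and exist only for illustration.

```python
import re


def keyword_overlap(query, passage):
    """Return the words shared by query and passage, ignoring context entirely."""
    def tokenize(text):
        # Splitting on non-letters turns "stand-alone" into "stand" and "alone".
        return set(re.findall(r"[a-z]+", text.lower()))

    return tokenize(query) & tokenize(passage)


query = "do estheticians stand a lot at work"
# Hypothetical passage that is not about standing on the job at all.
passage = "This stand-alone certification lets estheticians work independently."

print(sorted(keyword_overlap(query, passage)))  # ['estheticians', 'stand', 'work']
```

The passage shares three words with the query, so a pure keyword matcher would treat it as a strong result even though 'stand' is used in a completely different sense.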

How Will BERT Affect SEO?

Digital marketing services aim to make websites perform better in search engines, so any algorithm update influences the entire process. But unlike the Penguin or Panda updates, BERT does not judge web pages either positively or negatively. It is entirely about improving Google Search's understanding of human language.

BERT has the following effects:

- Coreference resolution - This is the process of determining whether two expressions in natural language refer to the same entity in the world. BERT helps Google keep track of entities when pronouns and noun phrases refer to them. This may be particularly important for longer paragraphs that reference multiple entities.

- Polysemy resolution - When a symbol, word, or phrase has many different meanings, that's called polysemy. For example, the verb "to get" can mean "procure" (I'll get the drinks), "become" (she got scared), or "understand" (I get it). BERT helps Google Search understand "text cohesion" and disambiguate words within phrases and sentences.

- Homonym resolution - Homonyms are words that sound alike or are spelled alike but have different meanings. As with polysemy resolution, sets such as 'to', 'two', and 'too', or the different senses of 'stand', illustrate the nuance that search previously missed or misinterpreted.

- Named entity determination - Named-entity recognition is a subtask of information extraction that seeks to locate named entity mentions in unstructured text and classify them into predefined categories. BERT helps when a named entity is recognized but could be any one of several entities that share the same name.

- Textual entailment - In natural language processing, textual entailment is a directional relation between text fragments. BERT can recognize when differently worded sentences mean the same thing, aligning queries formulated in one way with answers that amount to the same thing. This also improves the ability to predict "what comes next" in the query exchange.

- Questions and answers - Questions will be answered more accurately in the SERPs themselves, which may reduce the click-through rate (CTR) of sites. As Google's language understanding continues to improve, the better paraphrase understanding that BERT brings might also affect related queries in "People Also Ask."

How to Handle the BERT Update

According to Danny Sullivan (Google's public Search Liaison), "There's nothing to optimize for with BERT, nor anything for anyone to be rethinking." BERT is about providing better search results to the user. A page therefore needs good, relevant content to be listed as a relevant result.

Here are some ways to handle the BERT update:

Create compelling and relevant content

Users are more attracted to websites that provide accurate answers to their search queries. For example, a user who searches for "home remedies for dandruff" expects a web page that shares tips and home remedies for dandruff, not a website that sells shampoo or medicines for dandruff. A product can still be advertised, but not at the expense of answering the query accurately. Arrange the content so that the main focus is on the home remedies, and include information about the shampoo as one part of the piece.

Focus on Context Rather Than Keyword Density

We all know that keyword density no longer plays an important role in SEO, so sprinkling keywords everywhere on the website brings no benefit. Pay more attention to context, which is determined by processing each word in relation to the other words in the sentence, including prepositions and the words that precede and follow it. It is therefore best to structure your content around how you would answer a user's query. Try to solve the user's problem with in-depth, direct answers that match their intent.
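For readers unfamiliar with the metric, keyword density is usually taken to mean the share of a page's words that are the target keyword. The sketch below computes it under that assumption for single-word keywords only; the `keyword_density` function and both sample sentences are hypothetical and written just for this illustration.

```python
import re


def keyword_density(text, keyword):
    """Fraction of words in text equal to keyword (single-word keywords only)."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    return words.count(keyword.lower()) / len(words)


# Hypothetical copy: one keyword-stuffed line and one natural answer.
stuffed = "Dandruff remedies for dandruff: the best dandruff remedies."
natural = "Try massaging coconut oil into your scalp to relieve dandruff."

print(keyword_density(stuffed, "dandruff"))  # 0.375
print(keyword_density(natural, "dandruff"))  # 0.1
```

The stuffed line scores far higher on density yet tells the reader nothing, while the natural sentence actually answers the question, which is the point of focusing on context.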

Long-Tail or Short-Tail Phrases

There is a lot of confusion about keyword phrase selection, namely whether the focus should be on long-tail or short-tail keywords. Long content can provide relevant information to users, and BERT evaluates content at the sentence or phrase level. But it has disadvantages too: people tend to search in natural language, and we cannot be certain how long a query will be. So while creating content, think about how a user types or asks their queries. If the content is conversational and answers specific questions, you need not worry about the length of the phrase.

Many website owners are now confused about how they can improve their websites without being affected by the BERT update. But that is not the right way to approach this algorithm. Google has already stated that there is no real way to optimize for the BERT update. It was designed to help Google better understand the search intent of users when they search in natural language. Focus on writing relevant content for real people rather than for machines. If you are doing that, you are already "optimizing" for the BERT algorithm.

This guest post was written by Julie Varghese from Web Destiny.